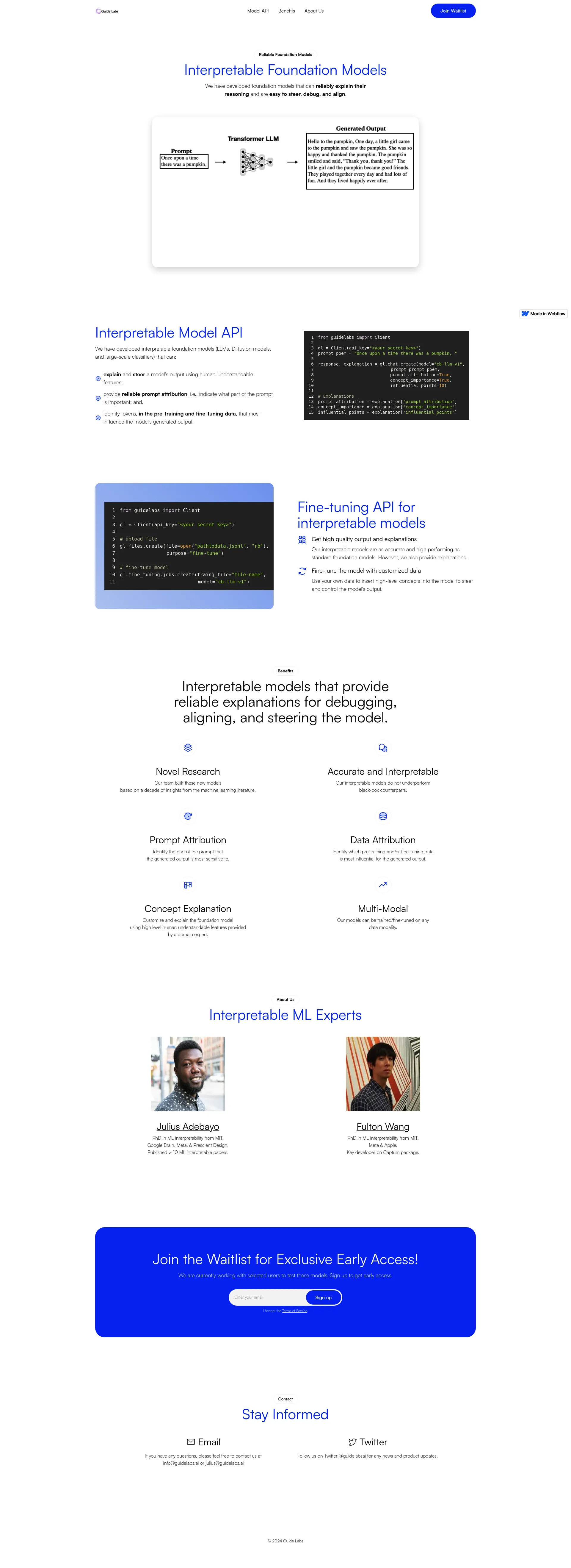

At Guide Labs, we build interpretable foundation models that can reliably explain their reasoning and are easy to align. We provide access to these models via an API. Over the past 6 years, our team has built and deployed interpretable models at Meta and Google. Our models provide three kinds of explanations: 1) a human-understandable explanation for each output token, 2) which parts of the input (prompt) are most important for each part of the generated output, and 3) which inputs in the training data directly led to the model's generated output. Because our models can explain their outputs, they are easier to debug, steer, and align.

Guide Labs offers flexible pricing plans tailored to different needs. Contact us for detailed pricing information and custom plans.

Guide Labs is led by experts in machine learning interpretability.

Join our waitlist for exclusive early access to our interpretable foundation models.
