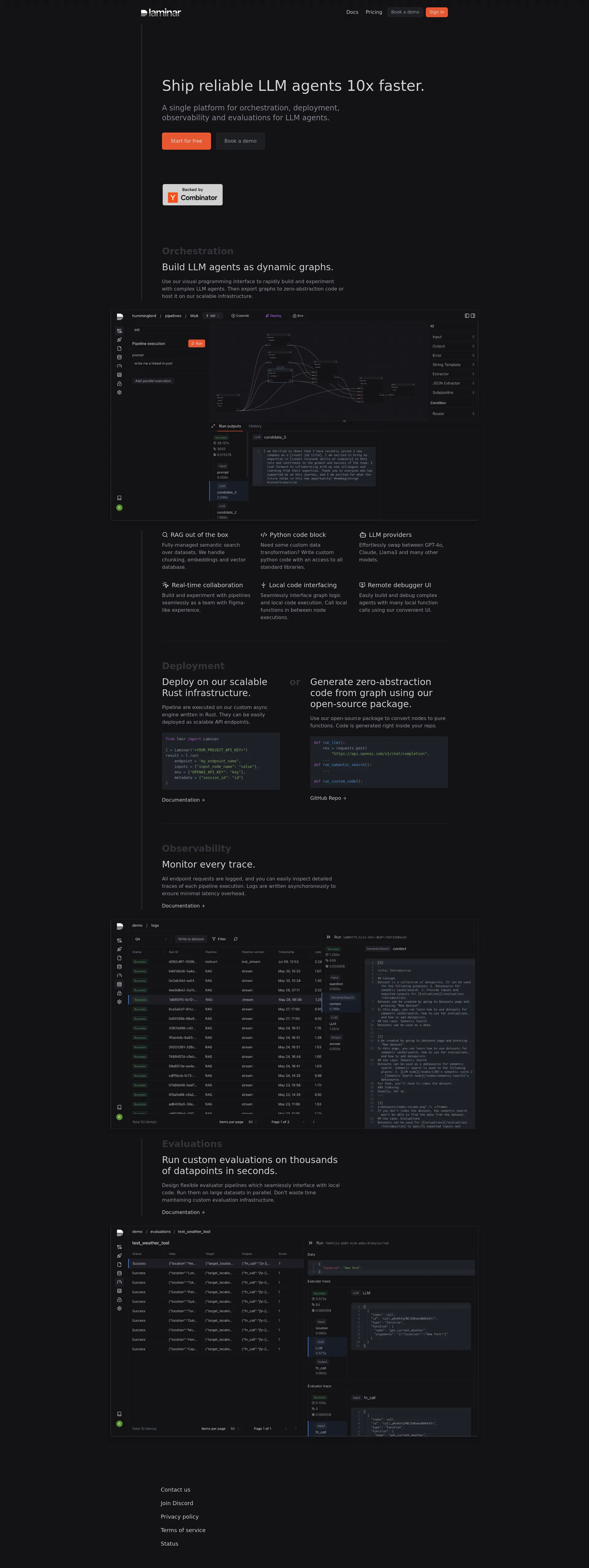

Laminar is a platform that enables AI developers to rapidly iterate on LLM applications and make them reliable. We do this by providing:

- a GUI for building LLM applications as dynamic graphs that interface seamlessly with local code. These graph pipelines can be hosted directly on our infrastructure and exposed as scalable API endpoints;
- an open-source package that generates abstraction-free code from these graphs directly into developers' codebases;
- a state-of-the-art evaluation platform that lets users build fast, custom evaluators without managing evaluation infrastructure themselves;
- a data management infrastructure with built-in support for vector search over datasets and files. Data can be easily ingested into LLMs, and LLMs can write to the datasets directly, creating a self-improving data flywheel;
- a low-latency logging and observability infrastructure.

Laminar combines orchestration, evaluations, data, and observability in a single platform to help AI developers ship reliable LLM applications 10x faster.

Laminar AI Docs is a comprehensive platform designed to streamline the orchestration, deployment, observability, and evaluation of large language model (LLM) agents. With Laminar, you can build, experiment with, and deploy complex LLM agents efficiently, reducing development time and improving collaboration.

Laminar AI Docs offers a flexible pricing model to cater to different needs.

Laminar AI Docs is designed to support collaborative work, making it well suited to teams.

Start leveraging the power of Laminar AI Docs to accelerate your LLM agent development and deployment today.
