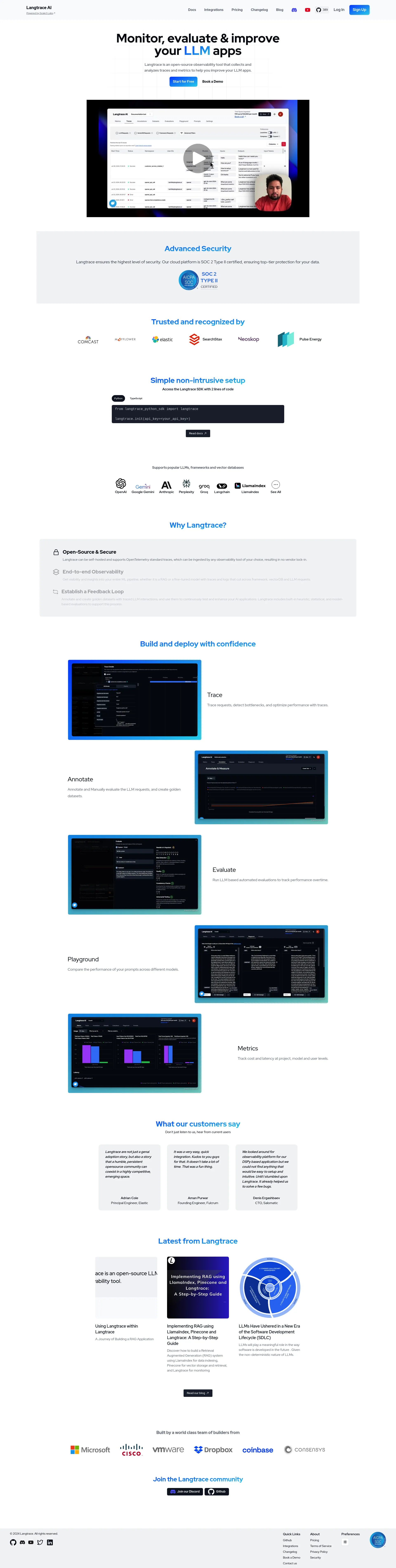

Langtrace AI is an open-source observability toolset for monitoring, evaluating, and optimizing large language model (LLM) applications. It collects and analyzes traces and metrics to give real-time insight into how an application performs. Langtrace is SOC 2 Type II certified for data protection. Setup is simple and non-intrusive, and is done through its Software Development Kit (SDK). It supports popular LLMs, frameworks, and vector databases, including OpenAI, Google Gemini, and Anthropic, among others. Its core capabilities include end-to-end observability of your machine learning pipeline, creation of golden datasets from traced LLM interactions for continuous testing and improvement, comparison of performance across different models, and tracking of cost and latency at various levels. Langtrace is community-driven, keeping an open-source approach in a highly competitive space.

Langtrace is an open-source observability tool designed to collect and analyze traces and metrics, helping you enhance your LLM (Large Language Model) applications. Powered by Scale3 Labs, Langtrace offers advanced security and seamless integration with popular LLMs, frameworks, and vector databases.
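To make "traces and metrics" concrete, here is a minimal, standard-library-only sketch of what recording latency and cost per LLM call can look like. It is not Langtrace's SDK or API: the `Tracer` and `Span` classes, the `fake_completion` function, and the prices in `PRICE_PER_1K_TOKENS` are all hypothetical names and values chosen for illustration.

```python
import time
from dataclasses import dataclass, field
from functools import wraps

# Hypothetical prices per 1,000 tokens; real prices depend on provider and model.
PRICE_PER_1K_TOKENS = {"model-a": 0.5, "model-b": 1.5}


@dataclass
class Span:
    """One traced call: what ran, on which model, how long, and at what cost."""
    name: str
    model: str
    latency_s: float
    tokens: int
    cost: float


@dataclass
class Tracer:
    """Illustrative in-memory trace collector (not the Langtrace SDK)."""
    spans: list = field(default_factory=list)

    def trace(self, model):
        """Decorator that records latency and token cost for each call."""
        def decorator(fn):
            @wraps(fn)
            def wrapper(*args, **kwargs):
                start = time.perf_counter()
                # The wrapped function is assumed to return (text, token_count).
                text, tokens = fn(*args, **kwargs)
                latency = time.perf_counter() - start
                cost = tokens / 1000 * PRICE_PER_1K_TOKENS[model]
                self.spans.append(Span(fn.__name__, model, latency, tokens, cost))
                return text
            return wrapper
        return decorator

    def totals_by_model(self):
        """Aggregate call count, tokens, cost, and latency per model."""
        totals = {}
        for span in self.spans:
            entry = totals.setdefault(
                span.model,
                {"calls": 0, "tokens": 0, "cost": 0.0, "latency_s": 0.0},
            )
            entry["calls"] += 1
            entry["tokens"] += span.tokens
            entry["cost"] += span.cost
            entry["latency_s"] += span.latency_s
        return totals


tracer = Tracer()


@tracer.trace(model="model-a")
def fake_completion(prompt):
    # Stand-in for a real LLM call: returns (text, token count).
    return prompt.upper(), len(prompt.split())


if __name__ == "__main__":
    fake_completion("hello world from tracing")
    print(tracer.totals_by_model())
```

An observability tool does this kind of bookkeeping automatically for supported libraries and sends the resulting spans to a backend for analysis, so that cost and latency can be compared across models.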

Langtrace offers a flexible pricing model to suit different needs. Start for free or book a demo to explore advanced features and custom solutions tailored to your requirements.

Langtrace is built by a world-class team of builders from diverse backgrounds, committed to providing top-notch observability tools for LLM applications. Join the Langtrace community on Discord and GitHub to collaborate and stay updated with the latest developments.

Improve your LLM apps with an open-source observability tool
